10 Traditional Survival Models

This page is a work in progress and minor changes will be made over time.

In a predictive setting it can be easy to dismiss ‘traditional models’ and favour testing of more modern ‘machine learning’ tools; this would be a mistake. Firstly, on low dimensional data (small number of variables), traditional methods often outperform machine learning models (Burk et al. 2024; Beaulac et al. 2020). Secondly, even on high-dimensional data, several papers have demonstrated that augmenting traditional models (Section 10.6) can yield models that outperform machine learning alternatives (Zhang et al. 2021; Spooner et al. 2020). Finally, the majority of machine learning survival algorithms make use of these traditional models, for example by using non-parametric estimators (Section 10.1) and/or assuming a proportional hazards form (Section 10.2), as a central component to construct an algorithm around. Therefore, a robust understanding of these models is imperative to fairly construct and evaluate machine learning survival models. This chapter begins with demonstrating non-parametric estimators as predictive tools, including a recap of some estimators in Chapter 3. Semi- and fully-parametric models are then introduced, most notably the Cox proportional hazards model and the accelerated failure time model. Finally, methods to improve traditional models through machine learning methodology is presented.

10.1 Non-Parametric Estimators

Non-parametric estimators have already been introduced in Section 3.5.2, therefore this section is brief and focuses only on how these estimators can be used as predictive models.

10.1.1 Unconditional estimators

Recall from Section 3.5.2 the Kaplan-Meier and Nelson-Aalen estimators respectively defined by

\[ S_{KM}(\tau) = \prod_{k:t_{(k)} \leq \tau}\left(1-\frac{d_{t_{(k)}}}{t_{(k)}}\right) \tag{10.1}\]

and

\[ H_{NA}(\tau) = \sum_{k:t_{(k)}\leq \tau} \frac{d_{t_{(k)}}}{n_{t_{(k)}}}. \]

where \(d_{t_{(k)}}\) and \(n_{t_{(k)}}\) are the number of events and observations at risk at the \(k\)th ordered event time, \(t_{(k)}\) respectively.

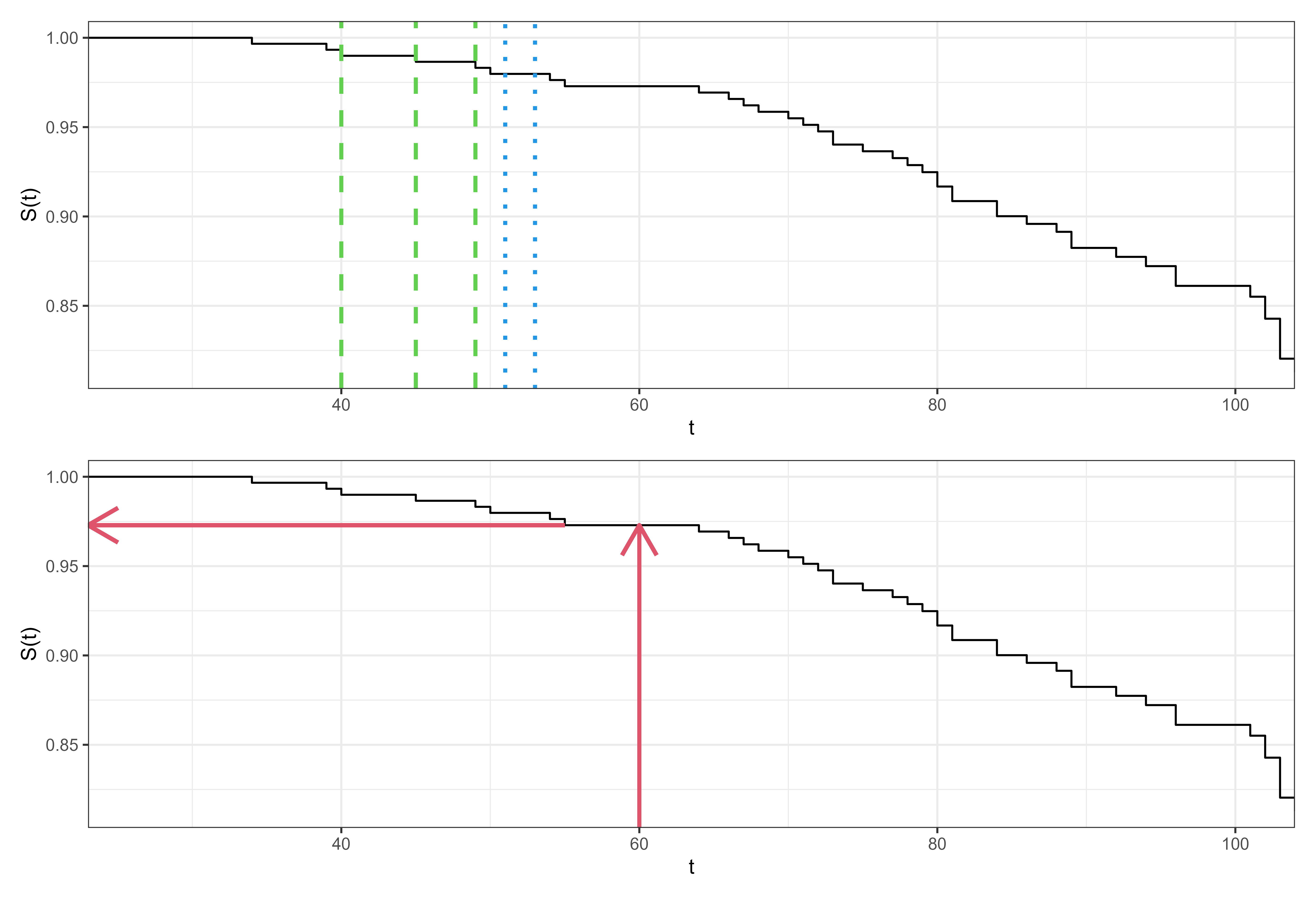

For example, Figure 10.1 shows the Kaplan-Meier estimator fit on the rats (Mantel, Bohidar, and Ciminera 1977) dataset. The top image displays how the estimator is a step function with steps occurring at event times (some examples in green dashed lines). At censoring times, the estimator stays constant (examples in blue dotted lines). The bottom image displays how to use the estimator as a predictive tool. To predict the survival probability of a new rat, one can find the estimated survival probability at a given time from the trained estimator, without needing any more details about the rat in question (as covariates are ignored). This provides a quick tool that tends to be well-calibrated to the average observation.

As predictive models, these can also be extended to other censoring and truncation types as well as event history analysis more generally from using the estimators defined in Section 3.5.2, Section 4.2.2, and Section 4.3.4.

10.1.2 Conditional estimators

As well as unconditional estimators, which do not account for covariates, an alternative is the conditional Akritas estimator (Akritas 1994) usually defined by (Blanche, Dartigues, and Jacqmin-Gadda 2013):

\[ S(\tau|\mathbf{x}^*, \lambda) = \prod_{k:t_{(k)}\leq \tau} \Big(1 - \frac{\sum^n_{i=1} K(\mathbf{x}^*,\mathbf{x}_i|\lambda)\mathbb{I}(t_i = t_{(k)}, \delta_i = 1)}{\sum^n_{i=1} K(\mathbf{x}^*,\mathbf{x}_i|\lambda)\mathbb{I}(t_i \geq t_{(k)})}\Big) \tag{10.2}\] where \(K\) is a kernel function, usually \(K(x,y|\lambda) = \mathbb{I}(\lvert \hat{F}_X(x) - \hat{F}_X(y)\rvert < \lambda), \lambda \in (0, 1]\), \(\hat{F}_X\) is the empirical distribution function of the data, and \(\lambda\) is a hyper-parameter. The estimator can be interpreted as a conditional Kaplan-Meier estimator which is computed on a neighbourhood of subjects closest to \(\mathbf{x}^*\). In fact, if \(\lambda = 1\) then \(K(\cdot|\lambda) = 1\) and (10.2) is identical to (10.1).

The formulation in (10.2) includes fitting and predicting in one step as the usual application of the model is as a non-parametric estimator. By first estimating \(\hat{F}_X\) on separate training data, the estimator can be used as a baseline predictive model.

10.2 Proportional Hazards

This section begins with an introduction to the proportional hazards concept, introduces estimation with the Cox PH model, and then moves to fully parametric proportional hazards models, with the Weibull model as a motivating example.

Let \(\eta_i = \mathbf{x}_i^\top\boldsymbol{\beta}\) be the linear predictor for some observation \(i\) with covariates \(\mathbf{x}_i\) and model coefficients \(\boldsymbol{\beta}\in \mathbb{R}^p\), then proportional hazards (PH) models assume that the hazard function for \(i\) follows the form \[ h_{PH}(\tau|\mathbf{x}_i)= h_0(\tau)\exp(\eta_i) \tag{10.3}\]

or equivalently:

\[ H_{PH}(\tau|\mathbf{x}_i)= H_0(\tau)\exp(\eta_i) \tag{10.4}\]

and

\[ S_{PH}(\tau|\mathbf{x}_i)= S_0(\tau)^{\exp(\eta_i)} \tag{10.5}\]

\(h_0,H_0,S_0\) are referred to as the baseline hazard, cumulative hazard, and survival function respectively. Instead of modelling a separate intercept, the baseline hazard represents the hazard when the linear predictor is zero, hence the term ‘baseline’. Note that the baseline hazard may not have a meaningful interpretation, unless the covariates are all centered around zero or reference coded in case of categorical covariates (similar to the intercept \(\beta_0\) in linear regression).

It can be seen from (10.3) that time is only incorporated via the baseline hazard (ignoring adaptations to time-varying models). Therefore, PH models estimate the baseline risk of an event at a given time, and modulate this risk according to the specification of covariates. This represents the eponymous ‘proportional hazards’ assumption as the individual’s hazard at time \(\tau\) is directly proportional to a multiplicative function of their own covariates: \(h(\tau|\mathbf{x}_i) \propto \exp(\eta_i)\). In other words, a unit change in a covariate acts multiplicatively on the estimated hazard. Further, the hazard ratio, which is a measure of the difference in risk, between two different subjects, depends solely on the value of their (linear) predictors and not on time. For a single covariate \(x\):

\[ \frac{h_{PH}(\tau|x_i)}{h_{PH}(\tau|x_j)} = \frac{h_0(\tau)\exp(x_i\beta)}{h_0(\tau)\exp(x_j\beta)} = \exp(\beta(x_i -x_j)) \]

Equivalently:

\[ h_{PH}(\tau|x_i) = \exp(\beta(x_i - x_j))h_{PH}(\tau|x_j) \tag{10.6}\]

So in the case where the covariate differs between subjects by \(1\), the hazard ratio increases multiplicatively by \(\exp(\beta)\). This yields an interpretable model, in which hazard ratios are constant over time and don’t depend on \(\tau\). That is, the covariates effect on the hazard is independent of time. Note that this doesn’t imply that the effect of covariates on the survival function is constant over time (a constant difference in hazards at each time point will accumulate over time and the difference between the survival functions will increase).

We next consider how to fit the \(\beta\) parameters semi-parametrically (Section 10.2.1) and fully parametrically (Section 10.2.2).

10.2.1 Semi-Parametric PH

The Cox Proportional Hazards (Cox PH) (Cox 1972), or Cox model, is likely the most widely known semi-parametric model and the most studied survival model (Reid 1994; Wang, Li, and Reddy 2019). Often, it is considered synonymous with proportional hazards and the functional form of the hazard given in (10.3). However, the main contribution of Cox’s work was to develop a method to estimate \(\boldsymbol{\beta}\) without making any assumptions about the baseline hazard. We derive the estimation of the parameters in some detail as the objective function of the Cox model is also used by many machine learning methods like boosting (Chapter 13) and neural networks (Chapter 14).

Recall from Section 3.5, to estimate the distribution of event times one either needs to make distributional assumptions and accordingly define the likelihood of observing the data (given model parameters), or to use non-parametric estimators, which usually do not incorporate covariate information. Let \(i_{(k)}\) denote the subject who experienced the event at ordered event time \(t_{(k)}\). Cox noted the contribution of an individual could be defined as the probability of a particular subject \(i_{(k)}\) experiencing the event at \(t_{(k)}\) given that someone in the risk set experienced the event at that time. The likelihood contribution of this \(k\)th event is given by

\[ \ell^{Cox}_{i_{(k)}} =\frac{h_0\left(t_{(k)}\right)\exp\left(\eta_{i_{(k)}}\right)} {\sum_{j\in \mathcal{R}_{t_{(k)}}} h_0\left(t_{(k)}\right)\exp\left(\eta_j\right)} =\frac{\exp\left(\eta_{i_{(k)}}\right)} {\sum_{j\in \mathcal{R}_{t_{(k)}}}\exp\left(\eta_j\right)}, \]

which depends on \(\boldsymbol{\beta}\) via \(\eta=\mathbf{x}^\top\boldsymbol{\beta}\). Note how the baseline hazard \(h_0\) cancels out in the likelihood contribution and thus doesn’t depend on time anymore. Thus, for the estimation of \(\boldsymbol{\beta}\), the baseline hazard can be considered a ‘nuissance parameter’ and the likelihood for the entire data set can be defined as:

\[ \mathcal{L}_{PL}(\boldsymbol{\beta}) = \prod_{k=1}^m \ell^{Cox}_{i_{(k)}} = \prod_{k=1}^m \left(\frac{\exp(\eta_{i_{(k)}})}{\sum_{j \in \mathcal{R}_{t_{(k)}}} \exp(\eta_j)}\right). \tag{10.7}\]

Information about the event times only contributes to (1) through the index of the product and sum, thus preserving rankings, i.e., the product is taken from first to last observed event time. The baseline hazard, and thus information about the exact event time is absent from the function. Moreover, censored observations only contribute in the denominator of the calculation. (1) is thereforer eferred to as a partial likelihood (Cox 1975) function, as it does not make use of all the observed data.

- also assumes that there are no ties in the event time, that is, no two subjects have an event at the same time. In practice, ties can be common and several methods have been proposed to handle them, namely an exact method (J. D. Kalbfleisch and Prentice 1973) (which is computationally expensive), the Breslow approximation (Breslow 1974), and the Efron approximation (Efron 1977); further details are not discussed here but all three methods are readily available in openly available software.

The log-partial likelihood, usually prefered for optimization, is given by \[ \mathcal{l}_{PL}(\boldsymbol{\beta}) = \sum_{k=1}^m \left(\eta_{i_{(k)}} - \log \left(\sum_{j \in \mathcal{R}_{t_{(k)}}} \exp(\eta_j)\right)\right), \tag{10.8}\] such that \[ \hat{\boldsymbol{\beta}} = \mathop{\mathrm{arg\,max}}_{\boldsymbol{\beta}}\ \mathcal{l}_{PL}(\boldsymbol{\beta}). \tag{10.9}\]

Traditionally, \(\hat{\boldsymbol{\beta}}\) is obtained using numerical optimization methods, such as Newton-Raphson or Fisher-Scoring, which require derivation the first and second deriviatives of 10.8.

Importantly, the partial likelihood allows us to estimate covariate effects (and interpret them in terms of hazard ratios) without making any assumptions about the underlying distribution of event times. Obtaining \(\hat{\boldsymbol{\beta}}\) also gives us enough information to make predictions in the form of relative risks (Chapter 5).

At this point, however, we don’t have an estimate of the baseline hazard \(h_0\) and thus cannot make survival distribution predictions. The Breslow estimator (Breslow 1972; Lin 2007) provides a way to obtain an estimate of the cumulative baseline hazard, \(H_0\), using the parameters from the Cox model: \[ H_{Bres}(\tau) = H_0(\tau) = \sum_{k:t_{(k)}\leq \tau} \frac{d_{t_{(k)}}}{\sum_{j \in \mathcal{R}_{t_{(k)}}} \exp(\eta_j)}. \tag{10.10}\] Note that if the value for all covariates or their effects was zero, or if there were no covariates, then the Breslow estimator is identical to the Nelson-Aalen estimator (Section 3.5.2.2):

\[ H_{Bres}(\tau) = \sum_{k:t_{(k)}\leq \tau} \frac{d_{t_{(k)}}}{\sum_{j \in \mathcal{R}_{t_{(k)}}} 1} = \sum_{t_{(k)}\leq \tau} \frac{d_{t_{(k)}}}{n_{t_{(k)}}} = H_{NA}(\tau). \]

With these formulae, the Cox PH model can be used as a predictive model by using training data to estimate \(\hat{\boldsymbol{\beta}}\) via (10.9). These fitted coefficients are used to predict \(\hat{\boldsymbol{\eta}}\) for new observations and finally the cumulative baseline hazard is computed with (10.10) to return a predicted distribution, for example the survival probability (10.5).

The Cox model is is highly interpretable and has a long history of usage in clinical prediction modelling and analysis. However, the proportional hazards assumption is often violated in real life, leaving the model to be a questionable choice when used for data analysis or inference. Over the years, extensions to the Cox model have been developed (Therneau and Grambsch 2001) to incorporate stratified baseline hazards (the PH assumption only has to hold within strata), time-varying effects (the effects of time-constant covariates change over time) and time-varying covariates (the values of covariates change over time). However, especially in case of time-varying covariates, it is difficult to make meaningful and interpretable predictions (as the values of covariates might not be known at the time of prediction). Whilst violation of the PH assumption can be problematic, especially for interpretation of covariate effects, it doesn’t appear to cause problems in terms of prediction accuracy. In fact, the Cox model often outperforms machine learning alternatives, including those that relax the PH assumption (Burk et al. 2024; Gensheimer and Narasimhan 2018; Luxhoj and Shyur 1997; Van Belle et al. 2011).

10.2.2 Parametric PH

Semi-parametric approaches (like the Cox model) are popular because they don’t make an assumption about the underlying distribution of event times, leaving the baseline hazard unspecified. However, there are some cases where modelling a particular distribution may make sense. On these occasions, a particular probability distribution of the event times is assumed, with three common choices (John D. Kalbfleisch and Prentice 2011; Wang, Li, and Reddy 2019) being the Exponential, Gompertz, and Weibull distributions. The Weibull distribution is particularly important as it reduces to the Exponential distribution when the shape parameter equals \(1\). Moreover, it is unique (and Exponential as a special case) in that it has both the PH and AFT (?sec-aft) property (technically a less known representation of Gompertz also has this property).

Assuming a PH model one can plug in the hazard and survival functions from the Weibull distribution into (10.3) and (10.5) respectively. First recall for a \(\operatorname{Weibull}(\gamma, \lambda)\) distribution with shape parameter \(\gamma\) and scale parameter \(\lambda\), the relevant functions can be given by (J. D. Kalbfleisch and Prentice 1973):

\[ h(\tau) = \lambda\gamma \tau^{\gamma-1} \]

and

\[ S(\tau) = \exp(-\lambda \tau^\gamma) \]

Taking these to be the baseline hazard and survival functions respectively, they can be substituted into the Cox model as follows: \[ h_{WeibullPH}(\tau|\mathbf{x}_i)= (\lambda\gamma \tau^{\gamma-1}) \exp(\eta_i) \tag{10.11}\] or equivalently \[ S_{WeibullPH}(\tau|\mathbf{x}_i)= (\exp(-\lambda \tau^\gamma))^{\exp(\eta_i)} \] Finally, these formulae can be used to define the full likelihood (Section 3.5.1) for the WeibullPH model (here for right-censored data):

\[ \begin{aligned} \mathcal{L}(\boldsymbol{\theta}) &= \prod_{i=1}^n h_Y(t_i|\mathbf{x}_i, \boldsymbol{\theta})^{\delta_i}S_Y(t_i|\mathbf{x}_i, \boldsymbol{\theta}) \\ &= \prod_{i=1}^n \Big((\lambda\gamma t_i^{\gamma-1} \exp(\eta_i))^{\delta_i}\Big)\Big(\exp(-\lambda t_i^\gamma\exp(\eta_i))\Big) \end{aligned} \]

with log-likelihood

\[ \begin{aligned} \mathcal{l}(\boldsymbol{\theta}) &= \sum_{i=1}^n \delta_i[\log(\lambda\gamma) + (\gamma-1)\log(t_i) + \eta_i] - \lambda t_i^\gamma\exp(\eta_i) \\ &\propto \sum_{i=1}^n \delta_i[\log(\lambda\gamma) + \gamma\log(t_i) + \eta_i] - \lambda t_i^\gamma \exp(\eta_i) \end{aligned} \]

Parameters can then be fit using maximum likelihood estimation (MLE) with respect to all unknown parameters \(\boldsymbol{\theta}= \{\boldsymbol{\beta}, \gamma, \lambda\}\). Expansion to other censoring types and truncation follows by using other likelihood forms presented in Section 3.5.

When considering which probability distributions to model in predictive experiments, Weibull is a common starting choice (Hielscher et al. 2010; R. and J. 1968; Rahman et al. 2017), its two parameters make it a flexible fit to data but on the other hand it can be easily reduced to Exponential when \(\gamma=1\). Gompertz (Gompertz 1825) is commonly used in medical domains, especially when describing adult lifespans. In a machine learning context, one can select the optimal distribution for future predictive performance by running a benchmark experiment. In contrast to the semi-parametric Cox model, fully parametric PH models can predict absolutely continuous survival distributions, they do not treat the baseline hazard as a nuisance, and in general will result in more precise and interpretable predictions if the distribution is correctly specified (Reid 1994; Royston and Parmar 2002).

10.2.3 Competing risks

There are two common methods to extend the Cox model to the competing risks setting. The first makes use of the cause-specific hazard to fit a cause-specific Cox model, the second fits a ‘subdistribution’ hazard.

Cause-specific PH models

In cause-specific models we define the hazard for cause \(e\) as:

\[ h_{e}(\tau|\mathbf{x}_{e;i})= h_{e;0}(\tau)\exp(\eta_{e;i}), \tag{10.12}\]

where \(h_{e;0}\) is a cause-specific baseline hazard and \(\mathbf{x}_{e;i}\) is a set of cause-specific covariates (although in practice often the same covariates are used for all causes), and \(\eta_{e;i}\) is the cause-specific linear predictor: \[ \eta_{e;i} = \mathbf{x}_{e;i}^T\boldsymbol{\beta}_e. \] In order to estimate \(\boldsymbol{\beta}_e\), let \(t_{e;(k)}, k=1,\ldots,m(e)\) be the unique, ordered event times at which events of cause \(e\) occur and let \(i_{e;(k)}\) be the index of the observation that experiences the \(k\)th event of cause \(e\). Then the cause-specific partial likelihood is given by: \[ \mathcal{L}_{PL}(\boldsymbol{\beta}_e) = \prod_{k=1}^{m(e)} \Bigg(\frac{\exp(\eta_{e;i_{e;(k)}})}{\sum_{j \in \mathcal{R}_{t_{e;(k)}}} \exp(\eta_{e;j})}\Bigg), \tag{10.13}\]

This is identical to the single-event partial likelihood in (1), with the only difference being that the product and sum are over the unique, ordered event times for cause \(e\). The risk-set definition is unaltered such that \(\mathcal{R}_{t_{e;(k)}}\) is the set of observations that have not experienced and event of any cause or censoring by \(t_{e;(k)}\).

Using the same logic, the Breslow estimator follows from (10.10): \[ H_{Bres;e}(\tau) = \sum_{k:t_{e;(k)}\leq \tau} \frac{d_{t_{e;(k)}}}{\sum_{j \in \mathcal{R}_{t_{e;(k)}}} \exp(\eta_{e;j})}, \tag{10.14}\] and the feature-dependent, cause-specific cumulative hazard (10.4) as

\[ H_{e}(\tau|\mathbf{x}) = H_{Bres;e}(\tau)\exp(\mathbf{x}^T\boldsymbol{\beta}_e) \tag{10.15}\]

Finally, in order to obtain an estimate of the cumulative incidence function \(F_e(\tau)\) (4.9), we need an estimate of the all cause survival probability and an estimate of the cause-specific hazard (which is not directly available in the Cox modelas the Breslow estimator only provides an estimate of the cause-specific cumulative baseline hazard). The all cause survival probability is obtained using 10.15 and the usual relationships (4.7, 3.4) as \[ S(\tau|\mathbf{x}) = \exp\left(-\sum_{e=1}^q H_{e}(\tau|\mathbf{x})\right) \tag{10.16}\] and an estimate of the cause-specific hazard is obtained via \[ \begin{aligned} h_{e}(\tau|\mathbf{x}) = \Delta H_{e}(\tau|\mathbf{x}) & = \Delta H_{Bres;e}(\tau)\exp(\mathbf{x}^T\boldsymbol{\beta}_e)\\ & = \frac{d_{t_{e;(k)}}}{\sum_{j \in \mathcal{R}_{t_{e;(k)}}} \exp(\eta_{e;j})}\exp(\mathbf{x}^T\boldsymbol{\beta}_e), \end{aligned} \tag{10.17}\] where \(\Delta H_{Bres;e}(\tau)\) is the increment of the cause-specific cumulative baseline hazard between successive time points (note the missing sum over different time points compared to 10.14). With 10.16 and 10.17, the CIF is approximated by \[ F_e(\tau|\mathbf{x}) = \sum_{k:t_{(k)}\leq \tau} S(\tau|\mathbf{x}) h_{e}(\tau|\mathbf{x}). \tag{10.18}\]

Subdistribution PH models

The methods discussed thus far estimate cause-specific hazards (4.4), which represent the instantaneous risk of an individual experiencing the cause of interest, given that they have not yet experienced any event. An alternative approach is to model subdistribution hazards, which model the risk of an individual experiencing the cause of interest, given they have not yet experienced the event of interest, but may have experienced a competing event. As will be shown below, the benefit of the subdistribution model is the ability to directly predict the cumulative incidence function (CIF) under a PH model, rather than using the indirect calculation in Equation (10.18). The subdistribution hazard approach also provides a direct relationship between covariates and the CIF for an event of interest (Austin, Lee, and Fine 2016).

Mathematically, the difference between cause-specific and subdistribution hazards comes from the definition of the risk set. The subdistribution risk set is defined as: \[ \mathcal{R}_{e;\tau} := \{i: t_i \geq \tau \vee [t_i < \tau \wedge e_i \neq e \wedge \delta_i = 1]\} \tag{10.19}\] Observe that in this definition the left-hand side is the same as the standard risk set definition (3.10) and the right-hand side additionally includes those that have experienced a different event already. Anyone that has been censored (\(e = e_0\)) before \(\tau\) is removed from the risk set.

The definition of the subdistribution risk (10.19) is equivalent to defining the subdistribution hazard for cause \(e\) as: \[ h^{SD}_{e}(\tau) = \lim_{\mathrm{d}\tau\to 0} \frac{P(\tau \leq Y \leq \tau + \mathrm{d}\tau, E = e\ |\ Y \geq \tau \vee (E \neq e \wedge \Delta=1))}{\mathrm{d}\tau}. \tag{10.20}\] Fine and Gray (Fine and Gray 1999) proposed a proportional hazards formulation of the subdistribution hazard (10.20) as \[ h_{FG;e}(\tau|\mathbf{x}_i)= h^{SD}_{e;0}(\tau)\exp(\eta_i), \tag{10.21}\] with subdistribution baseline hazard \(h^{SD}_{e;0}(\tau)\) (we add the superscript here in order to make explicit that this baseline hazard will differ from the one obtained from the Cox model (10.4) due to differences in risk set definition).

While the subdistribution hazards model is different from the proportional hazards Cox model, Fine and Gray (1999) showed that it’s parameters could be estimated using a weighted partial likelihood, which is otherwise identical to the cause-specific partial likelihood (10.13): \[ \mathcal{L}_{PL}^{SD}(\boldsymbol{\beta}) = \prod_{k=1}^m \left(\frac{\exp(\eta_{e;i_{e;(k)}})}{\sum_{j \in \mathcal{R}_{e;t_{e;(k)}}} w_{kj}\exp(\eta_{e;j})}\right), \tag{10.22}\] In (10.22), \(t_{e;(k)}\) is the same as in the cause-specific case and weights \(w_{kj}\) account for the fact that the subdistribution risk set is different from the cause-specific risk set. The weights are defined as \[ w_{kj} := \frac{G_{KM}(t_{e;(k)})}{G_{KM}(\min\{t_{e;(k)}, t_j\})}, \] where \(G_{KM}\) is the Kaplan-Meier estimator fit on the censoring distribution (Section 3.5.2.1). Because of the way the subdistribution risk set is defined in (10.19), the denominator of (10.22) is a weighted sum over individuals, \(j \in \mathcal{R}_{e;t_{e;(k)}}\), at time \(t_{e;(k)}\) who have either yet to experience an event (\(t_j \geq t_{e;(k)}\)) or experienced a different event (\(t_j < t_{e;(k)}\wedge e_i \ne e \wedge \delta_i=1\)). The weighting function handles these cases as follows:

- If \(t_j \geq t_{e;(k)}\) then \(w_{kj} = 1\) and thus observations that have not experienced any event contribute fully to the denominator.

- If \(t_j < t_{e;(k)}\) then \(w_{kj} < 1\) as \(G_{KM}\) is a monotonically decreasing function and \(w_{kj}\) continues to decrease as the distance between \(t_j\) and \(t_{e;(k)}\) increases. Thus the contribution from observations that have experienced competing events reduces over time.

Whilst modelling the subdistribution can seem unintuitive, note that if there is only one event of interest then \(w_{kj} = 1\) for all \(k\) and \(j\) and further \(e_i \neq e\) must always be false, meaning (10.19) reduces to the standard risk set definition (3.10) as only the left condition can ever be true and by the same logic the subdistribution hazard reduces to the usual hazard definition. Therefore the standard Cox PH for single events is perfectly recovered.

Instead of interpreting the subdistribution hazards directly, Austin and Fine (2017) recommend interpreting the fitted coefficients via the cause-specific CIF and cumulative hazard forms of (10.21), which can be obtained in the ‘usual’ way by first integrating to obtain the cumulative hazard form:

\[ H_{FG;e}(\tau|\mathbf{x}_i)= H^{SD}_{e;0}(\tau)\exp(\eta_i) \tag{10.23}\]

where \(H^{SD}_{e;0}\) is the cause-specific baseline cumulative hazard for cause \(e\). Then using (3.4) to relate the survival and hazard functions and representing this in terms of the CIF:

\[ F_{FG;e}(\tau|\mathbf{x}_i) = 1 - \exp(-H^{SD}_{e;0}(\tau))^{\exp(\eta_i)} \tag{10.24}\]

Or more simply:

\[ F_{FG;e}(\tau|\mathbf{x}_i) = 1 - (1 - F^{SD}_{e;0}(\tau))^{\exp(\eta_i)} \tag{10.25}\]

where \(F^{SD}_{e;0}\) is the cause-specific baseline cumulative incidence function for cause \(e\). The model in (10.25) is fit by estimating the baseline cumulative hazard function and substituting into (10.24). Similarly to how the subdistribution hazard was created, estimation of \(\hat{H}^{SD}\) follows by updating (10.10) to use the subdistribution risk set definition and applying the same weighting to compensate for multiple events:

\[ \hat{H}^{SD}_{Bres;e}(\tau) = \sum_{k:t_{e;(k)}\leq \tau} \frac{d_{t_{e;(k)}}}{\sum_{j \in \mathcal{R}_{e;t_{e;(k)}}} w_{kj}\exp(\hat{\eta}_j)} \tag{10.26}\]

Use of the Fine-Gray model has to be carefully considered before model fitting. The subdistribution risk set definition, which includes competing events, treats all causes as non-terminal (even if realistically impossible), which means an observation could be considered at risk of the event of interest even after experiencing a terminal event (like death). Moreover, combining subdistribution CIF estimates across causes can result in probabilities that exceed \(1\), which should be impossible as events are mutually exclusive and exhaustive (Austin et al. 2022). The cause-specific hazards approach for the estimation of the CIF (Section 4.2.1 and Equations 10.16, 10.18) is often preferred (Austin et al. 2022) as fitting subdistribution models requires model assumptions to hold for all causes simultaneously, which is not possible (Bonneville, de Wreede, and Putter (2024)).

The Fine-Gray model is most appropriate when one is only interested in analyzing one of the causes. In this case, interpretation of coefficients from a subdistribution model can be more intuitive as they represent the magnitude of the effect on the incidence, rather than the hazard, which is often of more interest, for example in clinical settings (Austin and Fine 2017).

10.3 Accelerated Failure Time

Whilst the proportional hazards models is a powerful model, it often does not represent real-world phenomena well. The accelerated failure time (AFT) model is a popular alternative which models the effect of covariates as ‘acceleration factors’ that act multiplicatively on time. In contrast to the PH model, AFT models are all fully-parametric. A semi-parametric model has been suggested (Buckley and James 1979) however this is not widely used as it lacks ‘theoretical justification’ and is ‘not reliable’ (Wei 1992). Similarly, whilst its theoretically possible to fit cause-specific AFT models for the competing risks setting, this does not appear common in practice.

10.3.1 Understanding acceleration

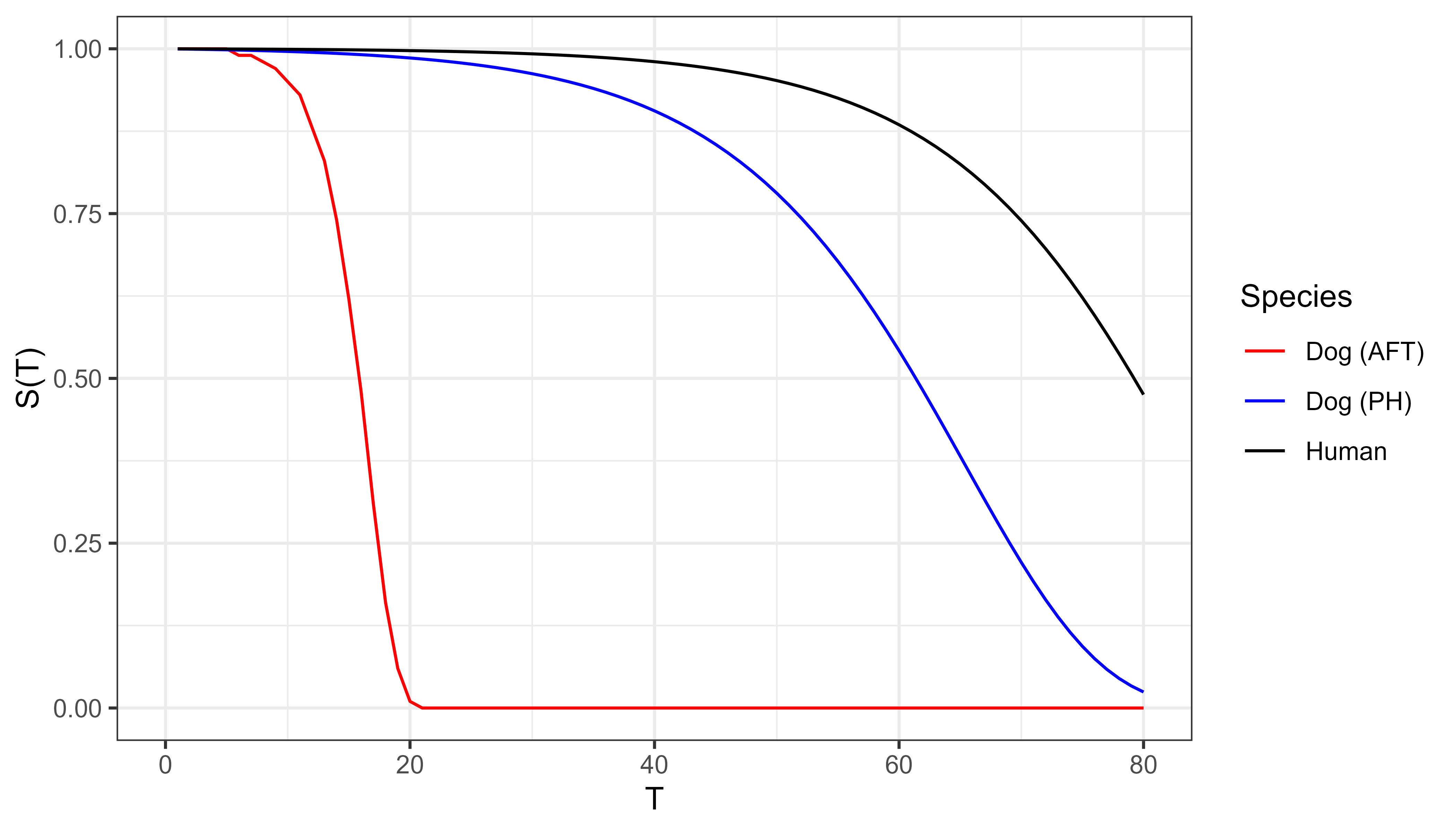

Moving from a PH to AFT framework can be confusing, so to elucidate this, take the following example adapted from Kleinbaum and Klein (1996). Consider the lifespans of humans and small dogs. In this example we are taking species to be a modifier and we’re looking at what survival would look like under AFT (time scaling) versus PH (hazard scaling).

Suppose small dogs age five times faster than humans. Under AFT (time-scaling modifier), a dog’s survival at age \(\tau\) matches a human’s at \(5\tau\):

\[ S_{dog}(\tau) = S_{human}(5\tau) \]

For example, at age 10, a small dog has the same probability of being alive as a human at age 50. The dog’s survival curve is accelerated by a factor of 5, as shown in the bottom, red curve in Figure 10.2.

If instead, one assumes a constant hazard ratio then at age \(\tau\), the dog’s risk is five times the humans (10.5):

\[ S_{dog}(\tau) = S_{human}(\tau)^5 \]

the middle, blue curve in Figure 10.2.

These illustrative curves demonstrate how the same modifier yields different behaviour under PH and AFT assumptions.

More generally, the accelerated failure time model estimates survival functions as

\[ S_{AFT}(\tau|\mathbf{x}_i) = S_0(\tau e^{-\eta_i}) \tag{10.27}\]

with respective hazard function

\[ h_{AFT}(\tau|\mathbf{x}_i) = e^{-\eta_i} h_0(\tau e^{-\eta_i}) \tag{10.28}\]

Note three key differences compared to the PH model. Firstly, \(\exp(-\eta_i)\) is modelled instead of \(\exp(\eta_i)\), hence in a PH model \(h_{PH}(\eta_i+1) > h_{PH}(\eta_i)\) whereas in an AFT model \(h_{AFT}(\eta_i+1) < h_{AFT}(\eta_i)\). Secondly, the baseline risk now clearly depends on both time and the linear predictor. Thirdly, an increase in a covariate results in a multiplicative increase over time compared to the PH model in which the hazard is increased – this is often seen as more intuitive to understand than hazard ratio, especially to clinicians who may be more interested in survival times and not abstract relative risks.

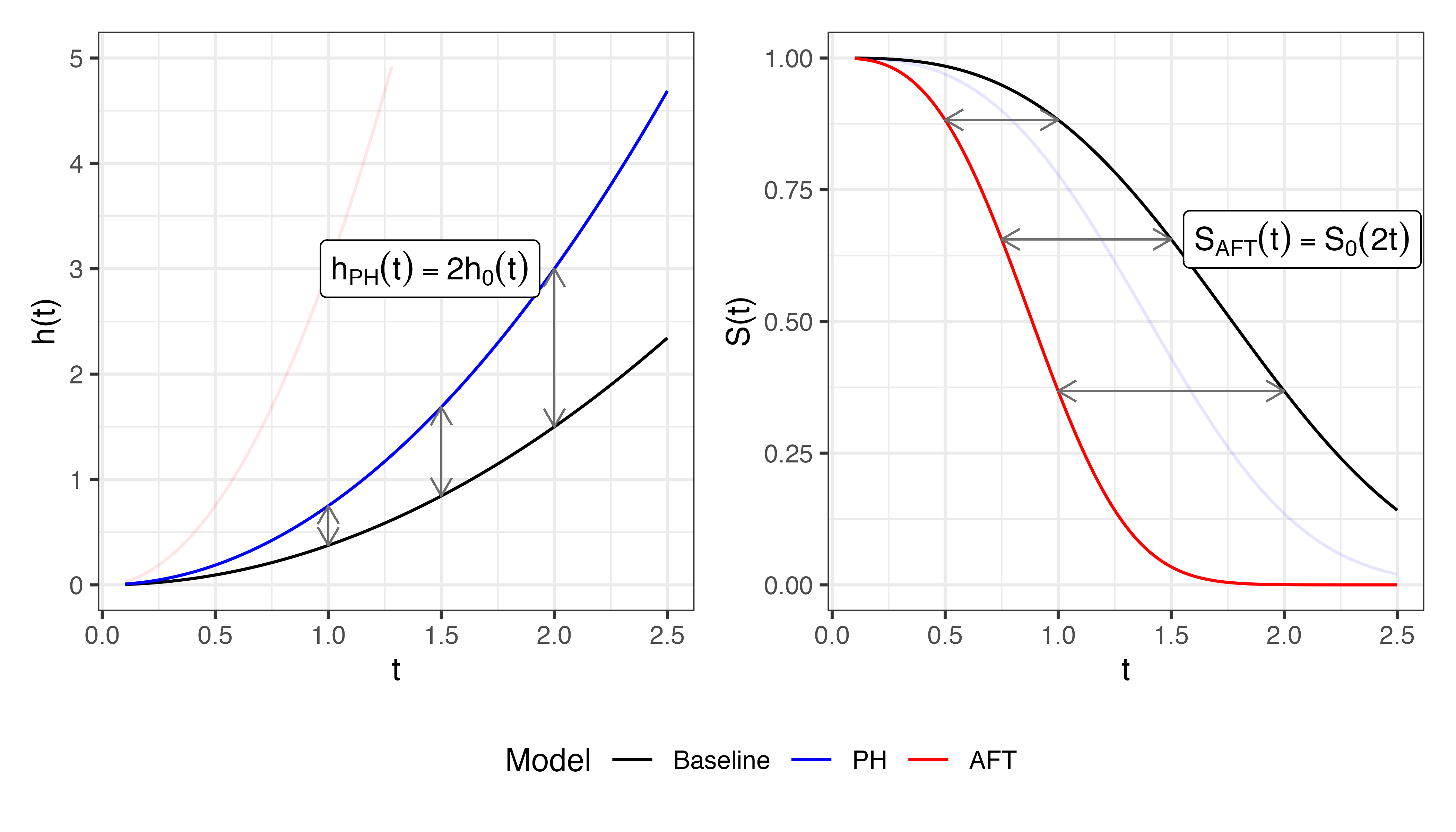

This third point is visualised in Figure 10.3 in which a covariate is increased by \(\log(2)\). The left panel shows that the estimated hazard function from a PH model is double the baseline at all time points – the multiplicative effect is seen on the y-axis (risk). In contrast, the right panel shows how the survival function from an AFT model decreases at double the speed to the baseline – the multiplicative effects is now on the x-axis (time). Another way to demonstrate this effect is through the log-linear form of the accelerated failure time model:

\[ \log(t_i) = \mu + \eta_i + \sigma\epsilon_i \tag{10.29}\]

where \(\sigma\) is a scale parameter, \(\epsilon_i\) is an error term, and \(\mu\) is an intercept. Now consider the difference in \(t_i\) when \(\eta_i\) is increased by one (assuming just one covariate):

\[ \log(t_i|x_i + 1) - \log(t_i|x_i) = (\mu + \beta (x_i + 1) + \sigma\epsilon_i) - (\mu + \beta x_i + \sigma\epsilon_i) = \beta \]

Taking exponentials

\[ \frac{t_i|x_i + 1}{t_i|x_i} = \exp(\beta) \]

Hence increasing a covariate effectively multiplies the survival time by \(\exp(\beta)\):

\[ t_i|x_i + 1 = e^{\beta}t_i | x_i \]

10.3.2 Parametric AFTs

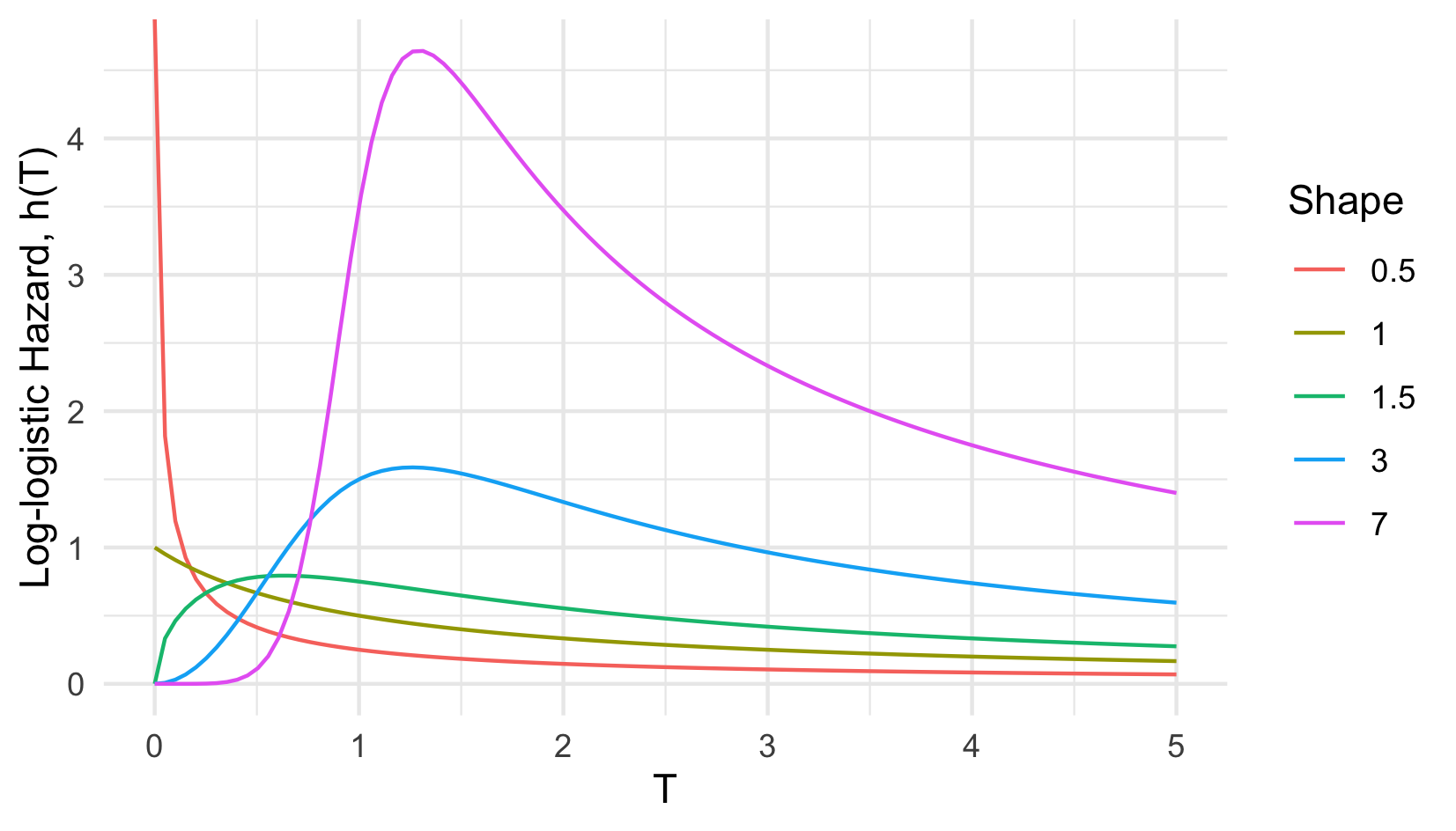

As stated, AFTs are usually fully parametric, which means \(S_0\) and \(h_0\) are chosen according to some specific distribution. Common distribution choices include Weibull, Exponential, Log-logistic, and Log-Normal (John D. Kalbfleisch and Prentice 2011; Wang, Li, and Reddy 2019). The hazard function of the log-logistic distribution is plotted in Figure 10.4, note the hazard is non-monotonic, allowing non-PH representations to be modelled where the risk of an event may increase before decreasing, or vice versa. When distributions are well-specified and the PH assumption is violated, AFTs can outperform PH alternatives (Patel, Kay, and Rowell 2006; Qi 2009; Zare et al. 2015).

As with the PH model, AFT models can be fit using maximum likelihood estimation of the full-likelihood by plugging in distribution defining functions into (10.27) and (10.27) and likelihoods defined in (Section 3.5.1). Using Exponential this time as an example (the maths is a bit more friendly), first recall that if \(X \sim \operatorname{Exp}(\lambda)\) then \(h_X(\tau) = \lambda\) and \(S_X(\tau) = \exp(-\lambda\tau)\). Then:

\[ h_{ExpAFT}(\tau|\mathbf{x}_i)= \lambda e^{-\eta_i} \]

and

\[ S_{ExpAFT}(\tau|\mathbf{x}_i)= \exp(-\lambda\tau e^{-\eta_i}) \]

Giving the ExpAFT likelihood (Section 3.5.1):

\[ \begin{aligned} \mathcal{L}(\boldsymbol{\theta}) &= \prod_{i=1}^n h_Y(t_i|\boldsymbol{\theta})^{\delta_i}S_Y(t_i|\boldsymbol{\theta}) \\ &= \prod_{i=1}^n \Big(\lambda e^{-\eta_i})^{\delta_i}\Big(\exp(-\lambda t_i e^{-\eta_i})\Big) \\ &= \prod_{i=1}^n \lambda^{\delta_i} \exp(-\lambda t_i e^{-\eta_i} - \delta_i\eta_i) \\ \end{aligned} \]

with log-likelihood

\[ \begin{aligned} \mathcal{l}(\boldsymbol{\theta}) &= \sum_{i=1}^n \log(\lambda^{\delta_i} \exp(-\lambda t_i e^{-\eta_i} - \delta_i\eta_i)) \\ &= \sum_{i=1}^n \delta_i\log(\lambda) - \lambda t_i e^{-\eta_i} - \delta_i\eta_i \\ \end{aligned} \]

Likelihoods can also be derived using the log-linear form in (10.29) however these are beyond the scope of this book. As before, extensions to other censoring types and truncation follows by specifying the correct likelihood form from Section 3.5.1.

10.4 Proportional Odds

Proportional odds models (Bennett 1983) are the final of the three major linear model classes in survival analysis. In contrast to the PH and AFT models just discussed, proportional models are rarely, if ever, used on their own to make inferences about underlying data or as predictive models. Instead they are more commonly found as components within neural networks (Chapter 14) or in flexible parametric models (discussed next). Therefore this section very briefly describes the motivation for the model and its key properties.

As the name may suggest, the proportional odds model is analogous to the proportional hazards model except with the goal of modelling odds instead of hazards. For a given time \(\tau\), the odds of an event happening at at \(\tau\) are

\[ O(\tau) = \frac{p(\tau)}{1 - p(\tau)} \]

Where \(p(\tau)\) is the probability of the event happening at \(\tau\). Of course as has been seen throughout this book the general interest is centered around the survival probability and therefore a more relevant quantity is the odds of the event not happening before \(\tau\):

\[ O(\tau) = \frac{S(\tau)}{1-S(\tau)} = \frac{S(\tau)}{F(\tau)} \]

By considering the same functional form as in the proportional hazards model, the proportional odds model follows analogously, subsituting odds in place of the hazards:

\[ O_{PO}(\tau|\mathbf{x}_i) = O_0(\tau)\exp(\eta_i) \]

where \(O_0\) is the baseline odds.

By the same logic as the proportional hazards model, this model assumes \(O(\tau|\mathbf{x}_i) \propto \exp(\eta_i)\) and that a unit increase in a covariate multiplies the odds of surviving past \(t\) by \(\exp(\eta_i)\). A useful implication is the convergence of hazard functions, which states \(h_i(\tau)/h_0(\tau) \rightarrow 1\) as \(\tau \rightarrow \infty\) (Kirmani and Gupta 2001). To see this note that the PO model can be represented in terms of the hazard function via (Collett 2014)

\[ h_{PO}(\tau|\mathbf{x}_i) = h_0(\tau)\Bigg(1 - \frac{S_0(\tau)}{(\exp(\eta_i) - 1)^{-1} + S_0(\tau)} \Bigg) \tag{10.30}\]

Dividing by the baseline hazard yields

\[ \frac{h_{PO}(\tau|\mathbf{x}_i)}{h_0(\tau)} = \Bigg(1 - \frac{S_0(\tau)}{(\exp(\eta_i) - 1)^{-1} + S_0(\tau)} \Bigg) = \frac{(\exp(\eta_i) - 1)^{-1} }{(\exp(\eta_i) - 1)^{-1} + S_0(\tau)} \]

Simplifying gives \((1 + S_0(\tau)(\exp(\eta_i) - 1))^{-1}\). As \(S_0(\tau) \rightarrow 0\) when \(\tau \rightarrow \infty\), it follows that the hazard ratio tends to \(1\) as \(\tau\) increases.

This means that for any individual, their individual hazard approaches the baseline hazard over a long period of time. This assumption holds in several contexts, commonly in medical domains in which death is the event of interest. For example, take two patients with a disease, patient \(i\) receives treatment and patient \(j\) does not. Let \(\exp(\eta_i) = 4\), \(\exp(\eta_j) = 1\), \(S_0(0) = 1\), and \(S_0(5) = 0.01\). Following (10.30), we have for patient \(i\)

\[ h_{PO}(0|\mathbf{x}_i) = 0.25h_0(0); \quad\quad h_{PO}(\tau|\mathbf{x}_i) = 0.97h_0(5) \]

and for patient \(j\)

\[ h_{PO}(0|\mathbf{x}_j) = h_0(0); \quad\quad h_{PO}(5|\mathbf{x}_j) = h_0(5) \]

The treatment effect reduces the hazard for observation \(i\) at early time-points. However, at the later time-point, the hazard is very similar for both observations.

There is no simple closed form expression for the partial likelihood of a semi-parametric proportional odds model and hence in practice a Log-logistic distribution is usually assumed for the baseline odds and the model is fit by maximum likelihood estimation on the full likelihood (Bennett 1983), discussed further in the next section.

10.5 Flexible Parametric Models

Royston-Parmar flexible parametric models (Royston and Parmar 2002) extend PH and PO models by estimating the baseline hazard with natural cubic splines. The model was designed to keep the form of the PH or PO methods but without being forced to estimate a misleading baseline hazard (for semi-parametric models) or missspecifying the survival distribution (for fully-parametric models). This is achieved by fitting natural cubic splines in place of the baseline hazard.

The crux of the method is to use splines to model time on a log-scale and to either estimate the log cumulative Hazard for PH models,or the log Odds for PO models. For the flexible PH model, a Weibull distribution is the basis for the baseline distribution, whereas a Log-logistic distribution is assumed for the baseline odds in the flexible PO model. The exact derivation of the model requires a lot of mathematical exposition which is not included here, a very good summary of the model is given in Collett (2014). Instead, below is the model in its full form with an explanation of the variables and figures that demonstrate cubic splines.

The flexible parametric Royston-Parmar (RP) proportional hazards and proportional odds model are respectively defined by

\[ \log{H}_{RP}(\tau|\mathbf{x}_i) = s(\tau|\boldsymbol{\gamma},\boldsymbol{k}) + \eta_i \tag{10.31}\]

\[ \log{O}_{RP}(\tau|\mathbf{x}_i) = s(\tau|\boldsymbol{\gamma},\boldsymbol{k}) + \eta_i \tag{10.32}\]

where \(\boldsymbol{\gamma}\) are spline coefficients to be estimated by maximum likelihood estimation, \(\boldsymbol{k}\) are the positions of \(K\) knots, and \(s\) is the restricted cubic spline function in log time defined by

\[ s(\tau|\boldsymbol{\gamma},\boldsymbol{k}) = \gamma_0 + \gamma_1\log(\tau) + \gamma_2\nu_1(\log(\tau)) + ... + \gamma_{K}\nu_K(\log(\tau)) \]

\(\nu_j\) is the basis function at knot \(k_j\) defined by

\[ \nu_j(x) = (x - k_j)^3_+ - \lambda_j(x - k_{min})^3_+ - (1 - \lambda_j)(x - k_{max})^3_+ \]

where \[ \lambda_j = \frac{k_{max} - k_j}{k_{max} - k_{min}} \]

and \((x - y)_+ = \max\{0, (x - y)\}\) for any \(x, y\), and where \(k_{min}\) and \(k_{max}\) are the boundaries of the cubic spline, meaning the curve is linear when \(\log(t) < k_{min}\) or \(\log(t) > k_{max}\).

To see how the proportional hazards RP model relates to the Weibull distribution, first we integrate and take logs of the Weibull-PH hazard function from equation (10.11):

\[ \log(H_{WeibullPH}(\tau|\mathbf{x}_i)) = \log\big((\lambda \tau^\gamma) \exp(\eta_i)\big) = \log(\lambda) + \gamma\log(\tau) + \eta_i \]

Setting \(\gamma_0 = \log(\lambda)\) and \(\gamma_1 = \gamma\) yields (10.31) when there are no knots. Analogous results can be shown between (10.32) and the Log-logistic distribution.

To fit the model, the number and position of knots are theoretically tunable, although Royston and Parmar advised against tuning and suggest often only one internal knot is required and the placement of the knot does not make a significant difference to performance (Royston and Parmar 2002). Increasing knots can increase model overfitting however Bower et al. (2019) showed up to seven knots does not significantly increase model bias. The model’s primary advantage is it’s flexibility to model non-linear effects and can also be extended to time-dependent covariates. Moreover, the model can be fit via maximum likelihood estimation and thus many standard off-shelf routines for estimating a smooth survival time function. Despite advantages, the model appears to be limited in common use which makes it difficult to verify the model’s utility across different contexts (Ng et al. 2018).

In the same manner as other proportional hazards models, the Royston-Parmar model can be extended to competing risks by modelling cause-specific hazards, considering only one event at a time and censoring competing events (Hinchliffe and Lambert 2013).

10.6 Improving traditional models

A number of model-agnostic algorithms have been created to improve a model’s predictive ability. When applied to traditional algorithms, these methods can be used to create powerful models that outperform other machine learning. As each could be the subject of a whole book, this section remains brief and just covers the general overview. These are split into methods for:

- modelling non-linear data effects;

- reducing the number of variables in a dataset; and

- combining predictions from multiple models.

10.6.1 Non-linear effects

One of the major limitations of the models discussed so far is the assumption of a linear relationship between covariates and outcomes (on the scale of the predictor). At first one might view the Cox model as non-linear due to the presence of the exponential function. However, the linearity becomes clear when the model is equivalently expressed as:

\[ \log\left(\frac{h(\tau|\mathbf{x}_i)}{h_0(\tau)}\right) = x_1\beta_1 + ... x_p\beta_p \]

Consider modelling the time until disease progression with covariates age (continuous) and treatment (binary):

\[ \log\left(\frac{h(\tau|\mathbf{x}_i)}{h_0(\tau)}\right) = x_{age_i}\beta_{age} + x_{trt_i}\beta_{trt} \]

In this form, increasing age from \(1\) to \(21\) or \(81\) to \(101\) has the same effect on the log hazard ratio; this is clearly not realistic. There are many approaches to relaxing linearity; these are discussed extensively elsewhere and not repeated here, we recommend (James et al. 2013) which covers non-linear modelling in detail.

In brief, PH and AFT can be extended to generalised additive models (GAM) in the ‘usual’ way. For example for the Cox model,

\[ \log\left(\frac{h_i(\tau|\mathbf{x}_i)}{h_0(\tau)}\right) = f_1(x_1) + ... + f_p(x_p) \]

where each \(f_j\) is a smooth, possibly non-linear function of its covariate. If all \(f_j\) are the identity function then this reduces to the standard Cox model. The functions \(f_j\) are often chosen to be natural splines, but step functions, polynomial bases, or any other transformation can also be used. The Royston-Parmar model (Section 10.5) is a special case of a GAM where splines are used to model the baseline hazard.

10.6.2 Dimension reduction and feature selection

In a predictive modelling problem, only a small subset of variables in datasets tend to be relevant for correctly predicting the outcome. Other variables are either redundant – they provide no more information than their counterparts – or irrelevant – they do not influence the outcome. In these cases, using all variables for modelling results in worse interpretability, increased computational complexity, and often inferior model performance as model’s tend to overfit the training data and generalise poorly to new data.

To improve model performance, one can therefore ‘help’ models by applying feature selection methods to reduce the number of variables in the dataset. Feature selection is often grouped into three categories: 1) filters; 2) wrappers; and 3) embedded methods. Wrappers fit multiple models on subsets of variables and select the best performing subsets, this is often computationally infeasible in the context of very large datasets, and as such have less relevance in survival analysis which often tackles very high-dimensional datasets such as genomic datasets and detailed time-series economic data. This section only looks at methods specific to survival analysis and does not consider general algorithms such as PCA, see further reading below for suggested additional material that covers these areas.

Embedded methods

Embedded methods refers to those that are incorporated during model fitting. The vast majority of machine learning models incorporate embedded methods and thus reduce a dataset’s size as part of the training process. In contrast, models that do not apply any form of feature selection will perform poorly when there is a large number of variables in a dataset. One model that straddles the line of ‘traditional’ and ‘machine learning’ methodology is the elastic Cox model, which incorporates the ‘lasso’ and ‘ridge’ regularization methods. Given a generic learning algorithm, lasso and ridge regularization constrain the model coefficients subject to the \(\cal{l}^1\)-norm and \(\cal{l}^2\)-norm respectively. The Lasso-Cox (Tibshirani 1997) model fits the Cox model by estimating \(\boldsymbol{\beta}\) as

\[ \hat{\boldsymbol{\beta}} = \mathop{\mathrm{arg\,max}}\mathcal{L}(\boldsymbol{\beta}); \text{ subject to } \sum |\beta_j| \leq \gamma \]

where \(\mathcal{L}\) is the likelihood defined in (1) and \(\gamma > 0\) is a hyper-parameter.

In contrast, the Ridge-Cox model estimates \(\boldsymbol{\beta}\) as

\[ \hat{\boldsymbol{\beta}} = \mathop{\mathrm{arg\,max}}\ \mathcal{L}(\boldsymbol{\beta}); \text{ subject to } \sum \beta_j^2 \leq \gamma \]

Ridge and lasso are both shrinkage methods, which are used to reduce model variance and overfitting, especially in the context of multi-collinearity. However, the \(\mathcal{l}^1\) norm in Lasso regression can also shrink coefficients to zero and thus performs variable selection as well. It is therefore possible to incorporate a feature selection method first and then pass the results to a Ridge-Cox model – though experiments have shown Ridge-Cox on its own is already a powerful tool (Spooner et al. 2020). Alternatively, as with many machine learning algorithms, deciding between lasso and ridge can be performed in empirical benchmark experiments by using elastic net (Simon et al. 2011; Zou and Hastie 2005), which is a convex combination of \(\mathcal{l}^1\) and \(\mathcal{l}^2\) penalties such that \(\boldsymbol{\beta}\) is estimated as

\[ \hat{\boldsymbol{\beta}} = \mathop{\mathrm{arg\,max}}\mathcal{L}(\boldsymbol{\beta}); \text{ subject to } \alpha \sum |\beta_j| + (1-\alpha) \sum \beta_j^2 \leq \gamma \]

where \(\alpha\) is a hyper-parameter to be tuned.

Filter methods

Filter methods are a two-step process that score features according to some metric and then select either a given number of top-performing features (i.e., have the best score) or those where the score exceeds some threshold. Once again determining the number of features to select, or the threshold to exceed, can be performed via hyperparameter optimisation. Bommert et al. (2021) compared 14 filter methods for high-dimensional survival data by extending existing methods, making use of tools seen throughout this book including non-parametric estimators, martingale residuals, and inverse probability of censoring weighting. However, the authors found that the method that outperformed all others was a simple variance filter:

\[ V(\mathbf{x}_{;j}) = \text{Var}(\mathbf{x}_{;j}) \]

where \(\mathbf{x}_{;j}\) is the \(j\)th variable in the data and \(V\) is the resulting score. The filter measures the amount of variance in the feature and removes features that have little variation.

Another common filter method is to train another model and make use of its embedded feature selection and pass these results to a simpler model. This allows a traditional model to be trained on relevant features without loss to interpretability or performance. Common choices for models to use in the first step of the pipeline include random forests (Chapter 11) and gradient boosting machines (Chapter 13).

10.6.3 Ensemble methods

Ensemble methods fit multiple models and combine the result into a single prediction. Common ensemble methods include boosting, bagging, and stacking. These are briefly explained below and can be applied to any model, whether machine learning or a simple linear model. Note that nested cross-validation should be used when fitting any of the models below in order to ensure no data is ‘leaked’ between training and predictions (Section 2.5).

Bagging and averaging

The simplest ensemble method is to fit multiple models on the same data and make predictions by taking an average over the individual model predictions. The average over predictions could be uniform, weighted with weights selected through expert knowledge, or weighted with weights optimised as hyper-parameters. Ensemble methods perform best when there is high variance between models as each then captures unique information about the underlying data. Therefore ensemble averaging is more common after first subsetting the training data and training each model on a different subset.

Whilst increasing variance is beneficial, too much variance may result in worse predictions. Hence, bagging (Bootstrap AGGregatING) is a common approach to increase variance without losing predictive accuracy. Bootstrapping is the process of sampling data with replacement, meaning the rows may be duplicated in each subset – this is discussed further in Chapter 11. After sampling the process is the same with predictions made and averaged.

Model-based boosting

Model-based boosting fits a sequence of models that iteratively improve model performance by correcting the previous model’s mistakes. The initial model is trained as usual on a testing dataset, and subsequent models are fit on the same features but using ‘pseudo-residuals’ as targets (a regression problem). These pseudo-residuals are the negative gradient of a chosen loss from the previous iteration – this is discussed in detail in Chapter 13. The result is a gradual increase in improvement as each model captures patterns missed previously. In a survival analysis context, many of the losses discussed in Part II can be used in this pipeline, with the choice of loss dependent on the task of interest. Model-based boosting is a generic pipeline which may underperform the purpose-built algorithms discussed in Chapter 13.

Stacking

Stacking can improve model performance by fitting multiple models and aggregating the predictions using a meta-model. Fitting a meta-model, often a simple linear model, results in fitted coefficients that weight the input models according to how close or far they were from the truth.

Training data is partitioned (once or several times) and the first partition is used to train several independent models, a meta-model is fit using the outputs (predictions) from the base models as features and the targets \((t_i,\delta_i)\) from the second partition as targets.

As an example, take stacking three Cox models with a Cox meta-model. Let \(\mathcal{D}= \{(\mathbf{x}_i, t_i, \delta_i)\}\) be a dataset. Partition \(\mathcal{D}\) into two disjoint datasets \(\mathcal{D}_1\) of size \(n\) and \(\mathcal{D}_2\) of size \(m\). Three Cox base models are fit on \(\mathcal{D}_1\) and linear predictors are obtained (predicted) for all \(m\) observations in \(\mathcal{D}_2\):

\[ Z = \begin{bmatrix} \hat{\eta}^{(1)}_{1} & \cdots & \hat{\eta}^{(3)}_{1} \\ \vdots & \ddots & \vdots \\ \hat{\eta}^{(1)}_{m} & \cdots & \hat{\eta}^{(3)}_{m} \\ \end{bmatrix} \]

where \(\hat{\eta}^{(j)}_{i}\) is the linear predictor for the \(i\)th observation in the validation set from the \(j\)th base model.

Fit the meta-Cox model on \((Z, (t_i, \delta_i)_{i \in \mathcal{D}_2})\):

\[ h_M(\tau|\hat{\boldsymbol{\eta}}_i) = h^{(M)}_{0}(\tau)\exp(\beta^{(M)}_1\hat{\eta}^{(1)}_{i} + \beta^{(M)}_2\hat{\eta}^{(2)}_{i} + \beta^{(M)}_3\hat{\eta}^{(3)}_{i}), \quad i \in \mathcal{D}_2 \]

Finally, the base learners are refit on all \(\mathcal{D}\) but keeping \(\hat{\boldsymbol{\beta}}^{(M)}\) and \(\hat{h}^{(M)}_0\) from the meta-model fixed.

Predictions for a new observation, \(\mathbf{x}^*\) are made by first predicting \((\hat{\eta}_*^{(1)},\hat{\eta}_*^{(2)},\hat{\eta}_*^{(3)})\) from the trained base models and passing those to \(h_M\) using the fitted \(\hat{\boldsymbol{\beta}}^{(M)}\) coefficients and \(\hat{h}^{(M)}_0\).

In the above example, Cox models are used throughout. However, any machine learning models with the same prediction types can be stacked, including combinations of models.